Renovating with a Green Tinge

We have recently renovated our 1915 vintage house in Neutral Bay, Sydney. Both my wife and I have PhDs in Atmospheric Sciences and both felt strongly that the new build should be as energy efficient as possible. The design is thermally efficient, including passive cooling features. We recycled huge numbers of bricks from the demolition in order to limit the carbon footprint of the build itself. Finally, we installed a heat-pump hot water system and 2.2kW of solar panels so as to limit the ongoing carbon footprint of our family of five. (We considered batteries, but found we could not afford them, so we're still very much connected to the grid for our power needs at night and on cloudy days like today.)

We moved into our renovated home six months ago and this post provides some thoughts on what we have learned - just what shade of green are we?

Our Installation

We have:

- a 315L Sanden "Eco®" Hot Water Heat Pump System that we purchased through the Eco Living Centre (who come highly recommended);

- 2.2kW of solar panels installed on a roof that faces slightly north of west;

- micro-inverters that report panel generation via ethernet over powerline; and,

- a "MyEnlighten" monitoring solution that reads power generation data and provides it to a cloud based monitoring solution from Enphase Energy.

This serves a family of five.

Like many others with solar panels, we generate surplus energy during the day that we sell to the grid for 8c per kWh. At night, we draw from the grid, purchasing energy at 40c per kWh. As I said, we do not have a battery system as we could not afford one.

My primary motivation for choosing a Sanden system was as a means of storing excess energy from our solar panels - it is my battery proxy.

Hot Water from Sunshine?

The Sanden heat pump draws 1kW. According to the manual, a tank recharge takes between one and five hours depending on the ambient air temperature, humidity, inlet water temperature, etc.

Since November, we have observed that the compressor runs for an hour each day or even less in January, which should come as no surprise given that we're in Sydney and are discussing the summer months. (The compressor is wall mounted and is so quiet that it's actually difficult to know when it is on: the noise of the drip from the condensation tray is easier to register than the low hum from the compressor itself.)

In terms of power generation, 17 February, 2016 has proven to be our best day, with 9.7 kilowatt-hours produced. On our west facing roof, generation exceeded 1kW between 11am and 4:30pm (AEDT, so about two hours either side of solar noon). At first blush, then, we generate more than enough energy on a sunny day to provide for all of our hot-water needs and much more besides.

Our challenge has been to align water heating with the availability of this "free" electricity.

Controlling the Heat Pump

The Sanden heat pump has a blockout time mode (this is something I looked into before purchasing the unit). This is as simple as it sounds: it sets a range of times where the heat pump is allowed to operate.

So, I set the blockout time to be between 4pm in the afternoon and 11am in the morning in the expectation of hot water production when power was likely to be "free" and, coincidentally, the ambient air temperature was likely warmest (thus ensuring optimum conditions for heat pump operation).

And mostly, this worked. Mostly. In practice, what happened was that we sometimes ended up with no hot water at all.

The reason is simple: the window in which the heat pump is allowed to run is too short and/or does not align with our water usage patterns.

The user and installation guides for the system state that hot water generation starts when either of two conditions is fulfilled:

- "The water heating cycle operation starts automatically when the residual hot water in the tank unit becomes less than 150 litres"; or,

- "The system will run once the power becomes available and the temperature in the tank drops below the set point of the tank thermistor".

So, for example, if there is 151 litres of water in the tank and this water is 1ºC above the thermistor set point, the heating cycle will not start.

Consider a tank in this state at 4pm, with the compressor blockout timer set from 4pm through 11am. The 151 litres is not enough to last through to 11am and, as a result, the kids do not have enough water for a hot bath and the morning shower is properly cold. Of course, the next morning, at 11am, the tank is fully recharged and so the system never remains in this state for more than one day.
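The cold-shower scenario can be reproduced with a minimal sketch of the start rules quoted above. This is only a model of the documented behaviour, not Sanden's firmware: the function names, the 60ºC set point and the assumption that blockout suppresses both start conditions are illustrative.

```python
def in_blockout(hour, start=16, end=11):
    """Return True if `hour` (0-23) falls inside the blockout window.

    The window may wrap past midnight, as 4pm through 11am does.
    """
    if start <= end:
        return start <= hour < end
    return hour >= start or hour < end


def heating_starts(residual_litres, tank_temp_c, set_point_c, hour):
    """Apply the two documented start conditions, gated by blockout."""
    if in_blockout(hour):
        return False  # assumed: blockout suppresses both conditions
    low_volume = residual_litres < 150           # first quoted condition
    below_set_point = tank_temp_c < set_point_c  # second quoted condition
    return low_volume or below_set_point


SET_POINT_C = 60  # hypothetical set point, for illustration only

# 3pm, 151 litres, 1 degree above the set point: nothing triggers.
print(heating_starts(151, SET_POINT_C + 1, SET_POINT_C, hour=15))  # False
# By 4pm the tank has dropped further, but blockout has begun.
print(heating_starts(140, SET_POINT_C + 1, SET_POINT_C, hour=16))  # False
# 11am next day: blockout ends and the depleted tank triggers a recharge.
print(heating_starts(100, SET_POINT_C - 5, SET_POINT_C, hour=11))  # True
```

The middle case is the trap: the tank misses the 3pm trigger by one litre and one degree, and by the time either condition is met the compressor is locked out until the next morning.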

In summary, setting the blockout mode for the heat pump to align with free power generation works most of the time, but not always, and that's a problem when we're talking basics like hot water. For this reason, we have disabled blockout mode as it does not provide us with a reliable hot water supply. Since we disabled blockout, the compressor is typically switching on at about 8pm each night meaning we are paying for hot water generation at 40c per kWh on days when we know we are selling 4 kWh of power to the grid at 8c per kWh. This stings!
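To put a number on the sting, here is a rough sketch of the cost of one heating cycle at night versus during the day. The tariffs are the ones quoted in this post; the one-kilowatt-hour cycle is an assumption based on a 1kW compressor running for about an hour.

```python
IMPORT_CENTS_PER_KWH = 40  # price paid for grid power at night (from this post)
EXPORT_CENTS_PER_KWH = 8   # price received for surplus solar (from this post)


def cost_of_heating_cents(kwh, from_solar):
    """Effective cost of a heating cycle.

    On solar, the cost is the export income forgone;
    otherwise it is the grid import price.
    """
    rate = EXPORT_CENTS_PER_KWH if from_solar else IMPORT_CENTS_PER_KWH
    return kwh * rate


cycle_kwh = 1  # assumption: 1 kW compressor running for about one hour
night = cost_of_heating_cents(cycle_kwh, from_solar=False)
day = cost_of_heating_cents(cycle_kwh, from_solar=True)
print(f"night: {night}c, day: {day}c, penalty: {night - day}c")
```

Under these assumptions, every kilowatt-hour of water heating that slips from the afternoon to the evening costs an extra 32c.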

Improvements to the Sanden Control System?

The firmware in the Sanden unit is pretty basic. Blockout is just that: the system will not generate hot water outside of the allowed hours. At a guess, I'd say that the firmware is implemented with nothing more than a timer. There are, I think, a couple of approaches that might easily address the problems.

Force a Tank Recharge at a Set Time

On 61 of the 79 days from 20 January through 13 April (today), our solar panels generated in excess of 1kW between 1pm and 2pm. That's enough to drive our heat pump.

From this observation comes a simple requirement: I should be able to tell my system to just switch on at 1pm every day and fully recharge the tank irrespective of the normal rules outlined earlier.

Conceptually, this is simple to implement and, for someone like myself (who monitors our power generation), would be ideal because it would:

- have provided me with "free" hot water for 77% of the days since January 20;

- eliminate cyclic 'cold shower' days because the tank is recharged every day;

- run the compressor once a day, just as it usually does at present; and,

- mean I would not have to go up a ladder and fiddle with blockout mode.

I am not suggesting that the "start at time" should be the only time the compressor is allowed to run - instead, on heavy usage days, the compressor will also run at other times to deal with demand.

It seems to me that this would be a very simple firmware change and it would have shaved an additional $20 off our Q1 electricity bill.
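The 77% figure and the rough size of the saving can be checked with a few lines of arithmetic. This is a back-of-envelope sketch: it assumes one kilowatt-hour of heating is shifted from grid power to solar on each qualifying day, and it covers the 79 observed days rather than the billing quarter.

```python
sunny_days = 61   # days with more than 1kW generated between 1pm and 2pm
total_days = 79   # days observed, 20 January through 13 April
share = sunny_days / total_days
print(f"share of days with free hot water: {share:.0%}")

IMPORT_CENTS_PER_KWH = 40  # grid price (from this post)
EXPORT_CENTS_PER_KWH = 8   # feed-in price (from this post)
KWH_SHIFTED_PER_DAY = 1    # assumption: one hour of a 1kW compressor

# Saving per shifted kWh is the import price avoided minus the export income lost.
saving_cents = sunny_days * KWH_SHIFTED_PER_DAY * (
    IMPORT_CENTS_PER_KWH - EXPORT_CENTS_PER_KWH
)
print(f"estimated saving: ${saving_cents / 100:.2f}")
```

On these assumptions the saving comes to a little under $20 over the period, which is in line with the estimate above.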

Implement 'Look-ahead'

In our example, the blockout window - from 4pm through to 11am - is rather long. The longer the blockout window, the greater the chance of a cold shower.

A look-ahead function would check: the current time against the start of the next blockout time; the length of that blockout period; and, the tank state. The idea is that the system could make an educated guess that the hot water will be exhausted and initiate a recharge before the blockout period starts.

The devil here is in the detail with the need for considerably more complex firmware. In our case, this would be neither as simple nor as effective as the simple "switch on at" time.
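As a hedged illustration of what look-ahead might involve, the sketch below makes the "educated guess" from an assumed average draw in litres per hour. The function name, the one-hour lead time and the usage figure are all hypothetical; real firmware would need a far better usage model.

```python
def should_precharge(hours_until_blockout, blockout_hours, residual_litres,
                     litres_per_hour, lead_hours=1.0):
    """Decide whether to start a recharge just before blockout begins.

    Recharge only if blockout starts within `lead_hours` and the tank
    is unlikely to last for the whole blockout period.
    """
    about_to_block = 0 <= hours_until_blockout <= lead_hours
    expected_use = litres_per_hour * blockout_hours  # crude usage estimate
    return about_to_block and residual_litres < expected_use


# 3:30pm with a 4pm-11am blockout (19 hours) and 151 litres in the tank,
# assuming an average draw of 10 litres per hour (hypothetical figure).
print(should_precharge(0.5, 19, 151, litres_per_hour=10))  # True
# A nearly full tank would be left alone.
print(should_precharge(0.5, 19, 300, litres_per_hour=10))  # False
```

Even this toy version needs a usage estimate that the current unit has no way of learning, which is where the extra firmware complexity comes from.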

Make Blockout a Weak Signal

Cold showers could be eliminated by making "blockout" a weak signal: a preference rather than a hard and fast rule.

That is, the heat pump would be allowed to operate during the blockout period in order to provide continuity of hot water supply.

A weak blockout mode would have to maintain a balance between the need for hot water, the strong preference to run the system during normal hours and the need to limit as far as possible the number of times the heat pump is started/stopped (so as to maximise the lifetime of the compressor).

So, as a first stab, I suggest that in blockout hours, the compressor be allowed to run only when the hot water supply is critically low and even then, for a limited time only in order to give a partial tank recharge. This should ensure that at the end of the blockout period, a full tank recharge happens.

Once again, the devil is in the detail here: we're talking about firmware with considerably more complexity than the current system. Once again, for us, this would not be as effective as the simple "switch on at" time discussed above.
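For what it's worth, the first-stab rule above can be written down in a few lines. This is a sketch only: the 50 litre "critically low" threshold and the 30 minute partial recharge are hypothetical values chosen for illustration, not anything from the Sanden documentation.

```python
def weak_blockout_run_minutes(in_blockout, residual_litres,
                              critical_litres=50, partial_minutes=30):
    """Return how long the compressor may run right now.

    None -> outside blockout, so the normal rules apply with no limit
    0    -> inside blockout with enough hot water, so stay off
    N    -> inside blockout and critically low: allow a partial recharge
    """
    if not in_blockout:
        return None
    if residual_litres < critical_litres:
        return partial_minutes
    return 0


print(weak_blockout_run_minutes(True, 151))   # 0: honour the preference
print(weak_blockout_run_minutes(True, 40))    # 30: short top-up only
print(weak_blockout_run_minutes(False, 40))   # None: normal operation
```

Capping the top-up leaves the tank short of full, so a complete recharge still happens when the blockout period ends. Limiting how often the compressor restarts is not modelled here.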

Wishful Thinking

These suggestions are just musings about how the Sanden unit could be improved. They will not make any difference to us, unfortunately.

My Water Heater, My Solar Panels and the Internet of Things?

Thus far, I have limited my suggestions to changes that might be made to the Sanden Heat Pump's firmware. In this section, I will briefly discuss the implications of an Internet of Things as it relates to our solar panels, hot water system, and other domestic appliances.

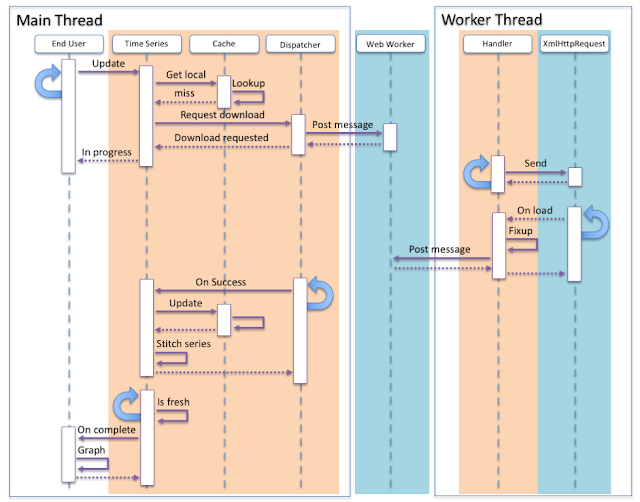

The Sanden unit has no remote control. Neither does the heat pump, nor do our solar panels, nor our fridge for that matter. These devices are 'dumb' in the sense that they are not connected to any network and therefore they cannot be remotely monitored or controlled. Although connected to the Internet, the MyEnlighten energy monitoring system tells me what I generate, but not what I am using. In short, there's a huge gap here that will, over time, be filled by the Internet of Things (IoT).

The vision for the IoT in the home is that every device will be connected, usually to a 'hub' that provides a means of access and automation. A common example is smart lights that can be controlled from a smartphone.

As Nest has proven, the IoT has a massive role to play in home energy management. Sadly, nascent "hub" products such as Nest's Revolv have not proven to be a good investment. Looking past this and other similar examples, the benefits of home automation mean that this will happen. But not in our household yet.

In our home, we manually program our washing machine to come on in the early afternoon and try to do the same with our dishwasher. We do this in order to maximise the use of our own power, just as we tried with the Sanden Heat Pump.

In future, these devices will tell the home automation system that they need to be switched on at some time and for how long. The system will know how much power each device draws (it will have learned through experience). It will also know how much power is available for free and, drawing on experience, the weather forecast and data shared by devices in the same region, how much power is likely to be available. It will then decide on the optimum order for device activation and, when the time comes, tell each device to start.
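A toy version of that decision can be sketched as a greedy scheduler: place the most energy-hungry device first, in the earliest window where the forecast surplus covers its draw. Everything here is hypothetical apart from the 1kW heat pump: the device list, the power figures, the forecast and the greedy strategy itself are illustrative assumptions, not a description of any real hub.

```python
def schedule(devices, surplus_kw):
    """Assign each device a start hour within the forecast solar surplus.

    devices:    list of (name, power_kw, hours_needed)
    surplus_kw: dict mapping hour of day -> forecast surplus in kW
    Returns a dict of name -> start hour, or None if nothing fits.
    """
    remaining = dict(surplus_kw)  # copy so the caller's forecast is untouched
    plan = {}
    # Largest energy demand first: big loads are the hardest to place.
    for name, power, hours in sorted(devices, key=lambda d: d[1] * d[2],
                                     reverse=True):
        plan[name] = None
        for start in sorted(remaining):
            window = range(start, start + hours)
            if all(remaining.get(h, 0.0) >= power for h in window):
                for h in window:
                    remaining[h] -= power  # reserve the power for this device
                plan[name] = start
                break
    return plan


forecast = {11: 1.2, 12: 1.5, 13: 1.5, 14: 1.2}  # hypothetical surplus in kW
devices = [
    ("heat_pump", 1.0, 1),        # 1kW for about an hour (from this post)
    ("washing_machine", 0.4, 2),  # hypothetical figures
    ("oven", 3.0, 1),             # hypothetical: more than the panels can supply
]
print(schedule(devices, forecast))
```

With this forecast the heat pump is placed at 11am, the washing machine at noon, and the oven is left unscheduled because no hour has 3kW to spare. A real system would also have to cope with forecast error and with devices that cannot be deferred.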

Bring it on, I say.

Which Shade of Green?

In my opening, I mentioned shades of green. Well, since installing solar and the Sanden system, our electricity usage for a family of five has fallen to less than the average for a single person. That's pretty good, yes?

No. It's not good enough: we are drawing between one and two kilowatt hours per day for the Sanden unit when I can show that on 77% of the days since January 20th, this is unnecessary.

The Sanden "Eco®" Hot Water Heat Pump System is excellent: it is amazingly quiet and very efficient. In other words, the engineering is superb.

It is the "programmability" of the Sanden unit that turns out to be a bit of a disappointment.

Postscript

Having written this, I decided to do the obvious thing and re-enable the blockout mode using a wider time window. I'm going to try 10am through 4:30pm and see how that goes - if we endure cold showers again, I'll make it wider still.

BTW, in April, at 4:30pm our panels get shaded - our generation falls from about a kilowatt to nil in about a minute so that's why I have chosen this time.

Second Postscript

Tried that, did not work. It is only possible to set a start and end time for blockout. So, I had to widen the operational time range. Not real happy with that.

Third Postscript

More in this post.